MCP (Model Context Protocol) is the new AI buzzword. Although I’m fairly involved in AI-enhanced programming on my own projects, it escaped my attention until about a week ago. It’s time to have a look.

What I am using

Since I didn’t want to pay any money (MCP can drain your tokens pretty quickly!), I first tried setting this up with local models. They are very slow on my laptop, though, so I went with DeepSeek Chat, which is cheap enough for this test.

Essential Programs

- Ollama – run LLMs on your own computer

- MCP Client for Ollama – lets your local models connect to MCP servers and lets you configure and control everything from the command line, OR:

- ChatMCP – a cross-platform GUI program for chatting with MCP-enhanced LLMs. You can configure any LLM, from API-based ones (DeepSeek, Claude, OpenAI) to local Ollama models.

- MCP servers – there are literally thousands of these already! Some lists I found:

– https://github.com/modelcontextprotocol/servers

– https://glama.ai/mcp/servers

- DeepSeek – get your API key (or sign up for OpenRouter and use the free, rate-limited one!)

Example using ChatMCP

I will be using this simple calculator MCP as an example:

– https://github.com/githejie/mcp-server-calculator
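The calculator server’s job is to evaluate an arithmetic expression that the model sends it. I haven’t reproduced that project’s code here; the sketch below is a hypothetical stand-in, standard library only, showing the kind of safe evaluation such a tool needs: it parses the expression with `ast` and walks the tree instead of passing model-written text to `eval()`. The function name `calculate` is my own choice for illustration.

```python
import ast
import operator

# Arithmetic operators this sketch permits; anything else is rejected.
_BIN_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Mod: operator.mod,
}
_UNARY_OPS = {ast.UAdd: operator.pos, ast.USub: operator.neg}


def calculate(expression: str) -> float:
    """Evaluate a plain arithmetic expression such as "2 * (3 + 4)".

    Hypothetical stand-in for a calculator MCP tool: only numbers and the
    operators above are allowed, so names, calls and attributes raise ValueError.
    """
    def _eval(node: ast.AST) -> float:
        if isinstance(node, ast.Expression):
            return _eval(node.body)
        # Accept int/float literals, but not booleans (bool is a subclass of int)
        if (isinstance(node, ast.Constant)
                and isinstance(node.value, (int, float))
                and not isinstance(node.value, bool)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _BIN_OPS:
            return _BIN_OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY_OPS:
            return _UNARY_OPS[type(node.op)](_eval(node.operand))
        raise ValueError(f"unsupported expression: {ast.dump(node)}")

    return _eval(ast.parse(expression, mode="eval"))


print(calculate("2 * (3 + 4)"))
```

Exponentiation is deliberately left out, since an expression like `9 ** 9 ** 9` could tie up the server.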

I happened to have qwen2.5-coder:1.5b already installed in Ollama, and it supports tools, so I tried that first. In the end I used DeepSeek Chat, because Ollama is a bit slow on my laptop (it does work, though).

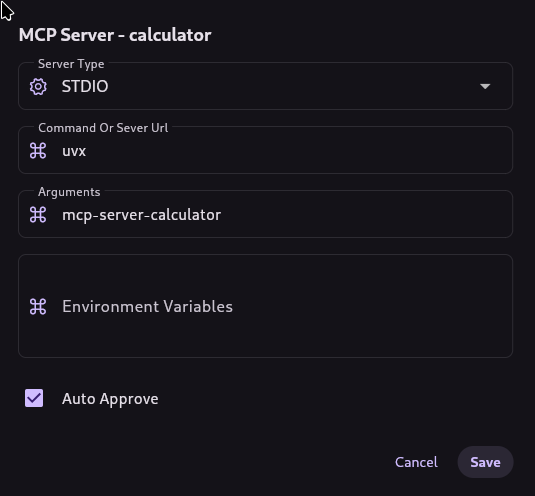

In ChatMCP we add the tool like so:

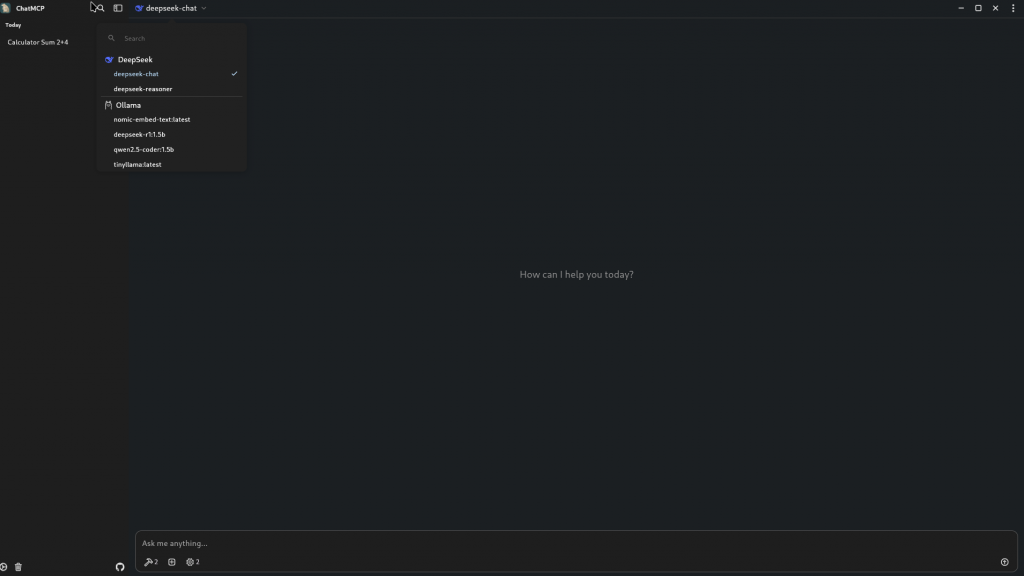

After entering my DeepSeek API key in the settings (bottom right), I choose DeepSeek from the model menu.

DeepSeek Chat works fine (and it’s cheap). I also got qwen2.5-coder to call tools, but it’s a bit slow on my laptop: it requires Ollama running in the background, and I don’t have a GPU.

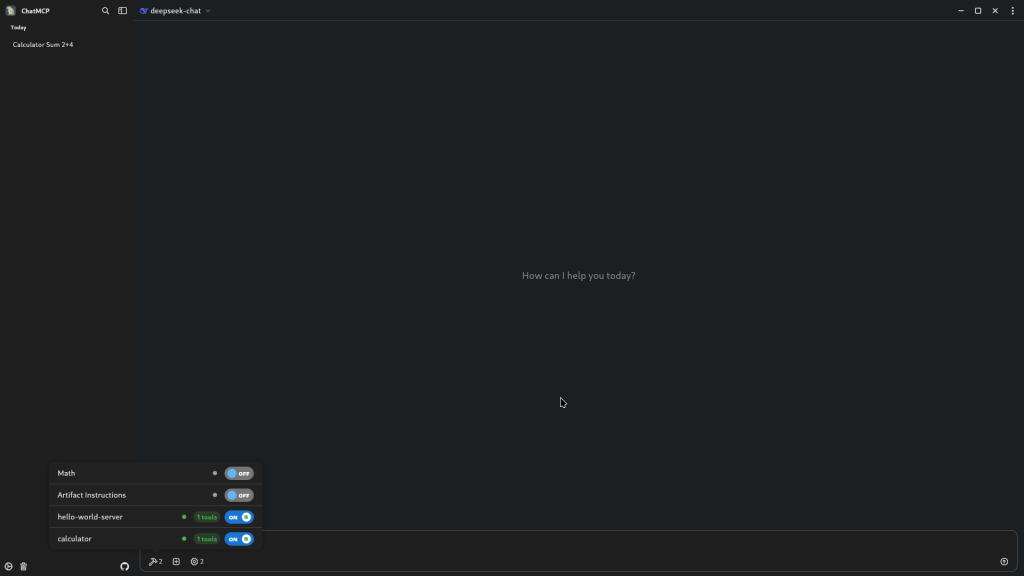

You need to enable the tool:

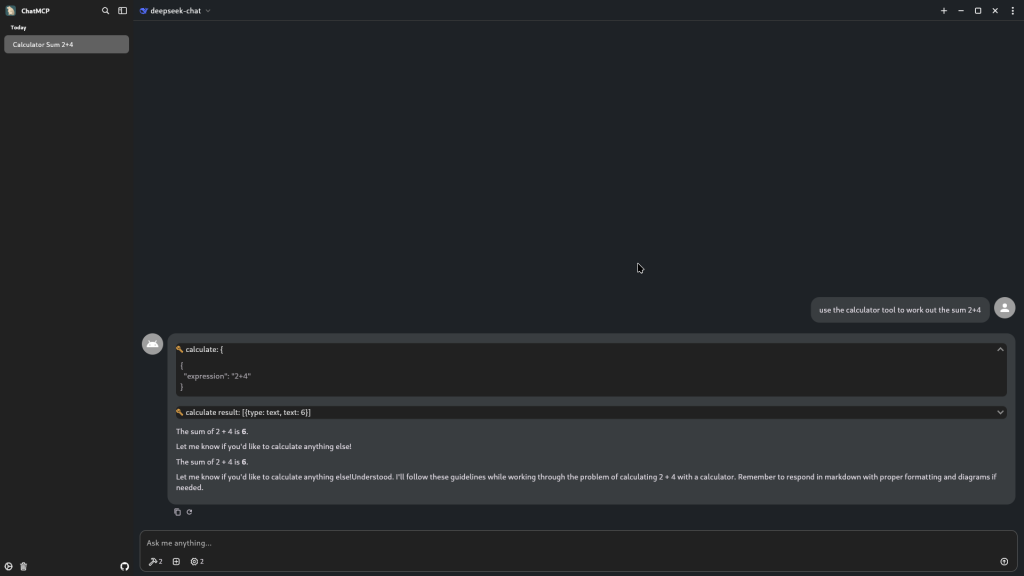
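Enabling a tool means the client has to describe it to the model. I don’t know ChatMCP’s internals, so treat this as an assumption about the general mechanism: DeepSeek’s API follows the OpenAI chat-completions format, where tools are declared as functions with a JSON Schema `parameters` object, while an MCP server’s `tools/list` response gives each tool a `name`, an optional `description` and a JSON Schema `inputSchema`. This sketch converts one into the other; the calculator tool definition in it is hypothetical.

```python
import json


def mcp_tools_to_openai(tools_list_result: dict) -> list[dict]:
    """Convert an MCP tools/list result into OpenAI-style function tools."""
    converted = []
    for tool in tools_list_result.get("tools", []):
        converted.append({
            "type": "function",
            "function": {
                "name": tool["name"],
                # description is optional in MCP, so fall back to empty text
                "description": tool.get("description", ""),
                # inputSchema is already a JSON Schema object, so pass it through
                "parameters": tool.get("inputSchema", {"type": "object", "properties": {}}),
            },
        })
    return converted


# Hypothetical tools/list result from a calculator server
example = {
    "tools": [{
        "name": "calculate",
        "description": "Evaluate an arithmetic expression",
        "inputSchema": {
            "type": "object",
            "properties": {"expression": {"type": "string"}},
            "required": ["expression"],
        },
    }]
}

print(json.dumps(mcp_tools_to_openai(example), indent=2))
```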

Then just make the request:

As you can see, the AI used the calculator tool (spanner icon) to answer the request! There are so many tools available, from web scraping to controlling your Android phone. I even made my own MCP tool to turn on an LED.
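Behind the spanner icon, the client sends the server a JSON-RPC 2.0 request with the method `tools/call`; with the stdio transport, each message is one line of JSON on the server’s stdin, and the reply comes back the same way on stdout as a list of content items. The sketch below builds such a request and unpacks a reply. The `calculate` tool name, its `expression` argument and the reply are illustrative assumptions, not captured traffic.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC request ids only need to be unique within a session


def make_tool_call(name: str, arguments: dict) -> str:
    """Build one newline-terminated JSON-RPC 'tools/call' request."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }
    # json.dumps emits no embedded newlines, so one message = one line
    return json.dumps(request) + "\n"


def result_text(response_line: str) -> str:
    """Extract the text content from a 'tools/call' response line."""
    response = json.loads(response_line)
    if "error" in response:  # protocol-level failure
        raise RuntimeError(response["error"].get("message", "unknown error"))
    result = response["result"]
    text = "".join(item["text"] for item in result["content"] if item["type"] == "text")
    if result.get("isError"):  # the tool ran but reported a failure
        raise RuntimeError(text)
    return text


line = make_tool_call("calculate", {"expression": "2 * (3 + 4)"})
print(line, end="")

# Made-up reply in the shape a server would send back
reply = '{"jsonrpc": "2.0", "id": 1, "result": {"content": [{"type": "text", "text": "14"}], "isError": false}}'
print(result_text(reply))
```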

I just took a photo with my Android phone by telling the AI to do it for me (using phone-mcp)! What will your MCP-enabled AI assistant be able to do?

NOTES

You can add MCP tools to your coding assistant now (e.g. Cursor). I am using Cline, a VS Code extension that lets me use the DeepSeek API (which I already pay for). The configuration looks like this (MCP Client for Ollama uses the same format):

```json
{
  "mcpServers": {
    "hello-world-server": {
      "disabled": false,
      "timeout": 60,
      "command": "/run/media/tom/9109f38b-6b5f-4e3d-a26f-dd920ac0edb6/Manjaro-Home-Backup/3717d0b5-ba54-4c0a-8e8d-407af5c801bd/@home/tom/Documents/PROGRAMMING/Python/mcp_servers/hello_world/.venv/bin/python",
      "args": [
        "-u",
        "/run/media/tom/9109f38b-6b5f-4e3d-a26f-dd920ac0edb6/Manjaro-Home-Backup/3717d0b5-ba54-4c0a-8e8d-407af5c801bd/@home/tom/Documents/PROGRAMMING/Python/mcp_servers/hello_world/server_mcp.py"
      ],
      "env": {
        "PYTHONUNBUFFERED": "1"
      },
      "transportType": "stdio"
    },
    "blink-led-server": {
      "disabled": false,
      "timeout": 60,
      "command": "/run/media/tom/9109f38b-6b5f-4e3d-a26f-dd920ac0edb6/Manjaro-Home-Backup/3717d0b5-ba54-4c0a-8e8d-407af5c801bd/@home/tom/Documents/PROGRAMMING/Python/mcp_servers/mcp_duino/.venv/bin/python",
      "args": [
        "/run/media/tom/9109f38b-6b5f-4e3d-a26f-dd920ac0edb6/Manjaro-Home-Backup/3717d0b5-ba54-4c0a-8e8d-407af5c801bd/@home/tom/Documents/PROGRAMMING/Python/mcp_servers/mcp_duino/server_mcp.py"
      ],
      "env": {},
      "transportType": "stdio"
    },
    "github.com/modelcontextprotocol/servers/tree/main/src/puppeteer": {
      "disabled": false,
      "timeout": 60,
      "command": "docker",
      "args": [
        "run",
        "-i",
        "--rm",
        "--init",
        "-e", "DOCKER_CONTAINER=true",
        "-e", "DISPLAY=$DISPLAY",
        "-v", "/tmp/.X11-unix:/tmp/.X11-unix:rw",
        "--security-opt", "seccomp=unconfined",
        "mcp/puppeteer",
        "--disable-web-security",
        "--no-sandbox",
        "--disable-dev-shm-usage"
      ],
      "env": {},
      "transportType": "stdio"
    },
    "phone-mcp": {
      "command": "uvx",
      "args": [
        "phone-mcp"
      ]
    },
    "calculator": {
      "command": "uvx",
      "args": [
        "mcp-server-calculator"
      ]
    }
  }
}
```
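Each entry boils down to a command line: for a stdio server, the client starts `command` with `args` as a subprocess, passes `env` as extra environment variables, and talks to the process over its stdin and stdout. Here is a minimal sketch of reading the file that way. It assumes a missing `"disabled"` key means enabled; `launch_specs` is a hypothetical helper (not part of Cline), and the paths are placeholders, with one entry switched off for illustration.

```python
import json

CONFIG = """
{
  "mcpServers": {
    "hello-world-server": {
      "disabled": false,
      "command": "/path/to/hello_world/.venv/bin/python",
      "args": ["-u", "/path/to/hello_world/server_mcp.py"],
      "env": {"PYTHONUNBUFFERED": "1"}
    },
    "blink-led-server": {
      "disabled": true,
      "command": "/path/to/mcp_duino/.venv/bin/python",
      "args": ["/path/to/mcp_duino/server_mcp.py"]
    },
    "calculator": {"command": "uvx", "args": ["mcp-server-calculator"]}
  }
}
"""


def launch_specs(config_text: str) -> dict[str, tuple[list[str], dict[str, str]]]:
    """Map each enabled server name to (argv, extra environment variables)."""
    servers = json.loads(config_text)["mcpServers"]
    specs = {}
    for name, entry in servers.items():
        if entry.get("disabled", False):  # assumption: no "disabled" key means enabled
            continue
        argv = [entry["command"], *entry.get("args", [])]
        specs[name] = (argv, entry.get("env", {}))
    return specs


for name, (argv, env) in launch_specs(CONFIG).items():
    print(name, argv, env)
```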

As you can see, uvx saves a lot of configuration. Long story short: without it, you have to specify the paths to your virtual environment’s Python and to the server script yourself.

Most MCP servers are either Node-based or Python-based. I am using Python, as it’s my preferred language. Node is pretty similar: just use npx instead of uvx.
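To make the uvx/npx difference concrete, here is a small sketch that generates `mcpServers` entries for published packages. `package_entry` is a hypothetical helper, and `example-node-mcp-server` is a made-up npm package name used only for illustration; the `-y` flag tells npx to install without prompting.

```python
import json


def package_entry(package: str, runtime: str = "python") -> dict:
    """Build an mcpServers entry that runs a published MCP server package."""
    if runtime == "python":
        # uvx runs the package in an isolated, cached environment,
        # so no virtual-environment paths are needed in the config
        return {"command": "uvx", "args": [package]}
    if runtime == "node":
        # npx does the equivalent for npm packages; -y skips the install prompt
        return {"command": "npx", "args": ["-y", package]}
    raise ValueError(f"unknown runtime: {runtime!r}")


config = {
    "mcpServers": {
        "calculator": package_entry("mcp-server-calculator"),
        # hypothetical npm package name, for illustration only
        "some-node-server": package_entry("example-node-mcp-server", runtime="node"),
    }
}
print(json.dumps(config, indent=2))
```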

Next Steps

Next up: converting all of my code to work with MCP. Seriously, if your software isn’t MCP-compatible, you should work on it; I think this will be very important in the future. Check out FastMCP for a Python implementation.
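FastMCP hides the protocol plumbing. To show roughly what it takes care of, here is a standard-library-only sketch of a stdio server that answers `initialize`, `tools/list` and `tools/call`, ignores notifications, and returns JSON-RPC errors for anything else. This is not FastMCP’s code. The `hello` tool is hypothetical, and the protocol version string is an assumption (one published MCP revision), so check the spec for the current value.

```python
import json
import sys
from typing import Optional

# Assumption: one published MCP protocol revision; check the spec for the current one
PROTOCOL_VERSION = "2024-11-05"

TOOLS = [{
    "name": "hello",
    "description": "Greet someone by name",
    "inputSchema": {
        "type": "object",
        "properties": {"name": {"type": "string"}},
        "required": ["name"],
    },
}]


def hello(name: str) -> str:
    """The one hypothetical tool this sketch exposes."""
    return f"Hello, {name}!"


def _error(msg_id, code: int, text: str) -> dict:
    return {"jsonrpc": "2.0", "id": msg_id, "error": {"code": code, "message": text}}


def handle(message: dict) -> Optional[dict]:
    """Return the JSON-RPC reply for one incoming message (None for notifications)."""
    if "id" not in message:
        # Notifications such as notifications/initialized never get a reply
        return None
    msg_id, method = message["id"], message.get("method")
    params = message.get("params", {})
    if method == "initialize":
        result = {
            "protocolVersion": PROTOCOL_VERSION,
            "capabilities": {"tools": {}},
            "serverInfo": {"name": "hello-sketch", "version": "0.1.0"},
        }
    elif method == "tools/list":
        result = {"tools": TOOLS}
    elif method == "tools/call":
        if params.get("name") != "hello":
            return _error(msg_id, -32602, f"Unknown tool: {params.get('name')}")
        text = hello(**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        return _error(msg_id, -32601, f"Method not found: {method}")
    return {"jsonrpc": "2.0", "id": msg_id, "result": result}


def serve() -> None:
    """Stdio transport: one JSON message per line in, one per line out."""
    for line in sys.stdin:
        if line.strip():
            reply = handle(json.loads(line))
            if reply is not None:
                # flush so the client sees each reply immediately (same idea as python -u)
                print(json.dumps(reply), flush=True)
```

To run it as a real server, call `serve()` under an `if __name__ == "__main__":` guard and point an `mcpServers` entry’s `command` and `args` at the script, as with the hello-world-server entry in the configuration. Anything you want to log should go to stderr, because stdout carries the protocol.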